Mask2Former Unified Mask

Classification for All Segmentation Tasks

Mask2Former is a simple, yet powerful framework for image segmentation that unifies the architecture for panoptic, instance, and semantic segmentation tasks.

About Mask2Former

Mask2Former is a cutting-edge deep learning model for panoptic, instance, and semantic segmentation. It unifies mask classification across tasks, delivering accurate, efficient, and versatile segmentation in a single framework for computer vision applications.

Revolutionizing Image Segmentation

“Revolutionizing image segmentation transforms how computers understand visual data, enabling precise detection, classification, and separation of objects. Advanced models like Mask2Former unify panoptic, instance, and semantic segmentation, delivering faster, more accurate, and versatile results.

Key Features

Mask2Former introduces several innovative features that set it apart from previous segmentation models

Unified Architecture

Single model for panoptic, instance, and semantic segmentation tasks, eliminating the need for task-specific architectures.

Mask Classification

Treats segmentation as mask classification, predicting a set of binary masks each associated with a class prediction.

Efficient Training

Masked attention mechanism speeds up convergence by 3x compared to standard transformer models.

State-of-the-Art Performance

Achieves top results on COCO panoptic segmentation, instance segmentation, and ADE20K semantic segmentation.

Simplified Pipeline

Eliminates many hand-crafted components like non-maximum suppression and anchor generation.

Easy Implementation

Simple and modular codebase with support for popular deep learning frameworks.

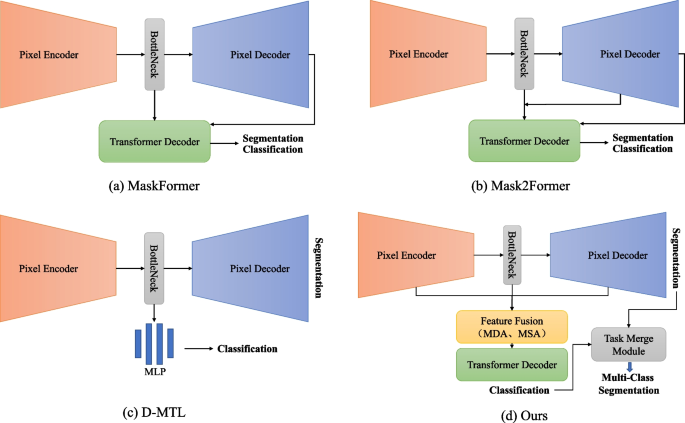

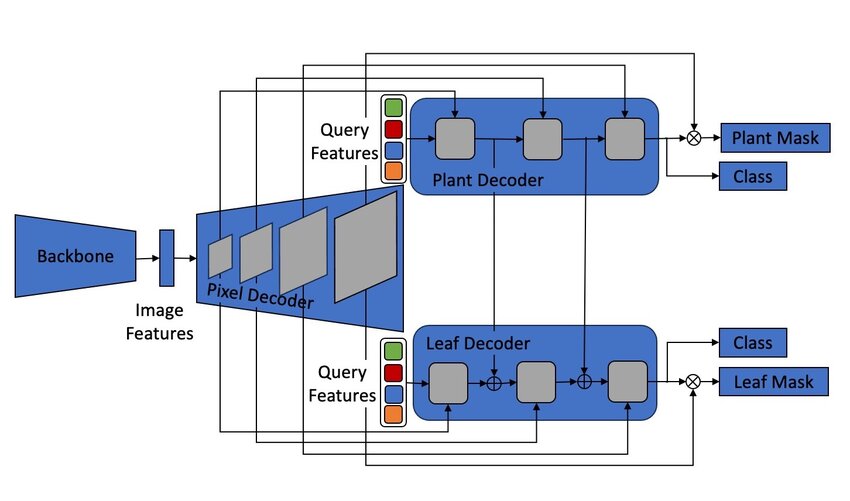

Architecture

Mask2Former’s architecture consists of three main components: a backbone, a pixel decoder, and a transformer decoder.

Backbone

Extracts multi-scale feature maps from the input image using a standard CNN (e.g., ResNet) or transformer backbone.

Pixel Decoder

Gradually upsamples the feature maps to generate high-resolution per-pixel embeddings.

Transformer Decoder

Uses masked attention to predict a set of object queries and their associated masks and class labels.

Applications

Mask2Former can be applied to various computer vision tasks that require precise pixel-level understanding.

Autonomous Driving

Scene understanding for self-driving cars, identifying roads, vehicles, pedestrians, and traffic signs.

Robotics

Object manipulation and navigation by providing detailed segmentation of the environment..

Medical Imaging

Precise segmentation of organs, tumors, and other anatomical structures in medical scans..

Remote Sensing

Land cover classification, urban planning, and environmental monitoring from aerial imagery.

Performance

Mask2Former achieves state-of-the-art results on major segmentation benchmarks while being more efficient than previous approaches.

57.8%

PQ on COCO

57.7%

mIoU on ADE20K.

3x

Faster Convergence

50.1%

AP on COCO

Installation

Getting started with Mask2Former is straightforward with our provided code and pretrained models.

Install Dependencies

Install PyTorch, Detectron2, and other required packages.

Clone Repository

Clone the Mask2Former repository from GitHub.

Configure Model

Set up the configuration file for your specific task and dataset.

Run Inference

Use the provided demo code to run segmentation on your images.

# Install the required dependencies

pip install torch torchvision

pip install git+https://github.com/facebookresearch/Mask2Former.git

# Load a pretrained model and perform inference

from mask2former import add_maskformer2_config

from detectron2.config import get_cfg

from demo.predictor import VisualizationDemo

# Setup config

cfg = get_cfg()

add_maskformer2_config(cfg)

cfg.merge_from_file("configs/coco/panoptic-segmentation/swin/maskformer2_swin_large_IN21k_384_bs16_100ep.yaml")

cfg.MODEL.WEIGHTS = "model_final.pkl"

# Create predictor

predictor = VisualizationDemo(cfg)

# Run on an image

outputs = predictor.run_on_image(image)console.log( 'Code is Poetry' );

Resources

Access the paper, code, models, and other resources to get started with Mask2Former.

Research Paper

Read the original Mask2Former paper published at CVPR 2022 for technical details and experimental results.

Demos & Tutorials

Try our online demo and follow step-by-step tutorials to implement Mask2Former in your projects.

Model Zoo

Download pre-trained models for various segmentation tasks and backbones.

Code Repository

Access the official implementation on GitHub with training and inference code, and pre-trained models.

Frequently Asked Questions

What is Mask2Former in deep learning?

A unified framework for semantic, instance, and panoptic segmentation.

How does Mask2Former improve image segmentation?

It uses transformers to generate accurate mask predictions.

Is Mask2Former only used for semantic segmentation?

No, it supports semantic, instance, and panoptic segmentation.

Who introduced the Mask2Former model?

Facebook AI Research (FAIR).

Why is Mask2Former considered a unified framework?

Because one model handles multiple segmentation tasks.

How does Mask2Former use transformers for segmentation?

It applies attention across image features to predict masks.

What role do attention mechanisms play in Mask2Former?

They capture global context for better segmentation accuracy.

How does Mask2Former differ from Mask R-CNN?

Mask2Former is transformer-based, while Mask R-CNN is CNN-based.

What is the function of query-based masks in Mask2Former?

Queries represent objects or regions and generate masks.

Does Mask2Former use pixel-level or region-level segmentation?

It focuses on region-based mask prediction.

Can Mask2Former be applied in medical image analysis?

Yes, for tasks like tumor and organ segmentation.

How is Mask2Former used in autonomous driving systems?

It helps detect roads, pedestrians, and vehicles.

Does Mask2Former support video segmentation tasks?

Yes, with adaptations for temporal consistency.

What industries benefit most from Mask2Former?

Healthcare, robotics, automotive, and surveillance.

Can Mask2Former improve object detection performance?

es, by combining detection with segmentation.

How does Mask2Former compare to Segmenter?

Mask2Former shows better accuracy and flexibility.

What datasets are commonly used to train Mask2Former?

COCO, ADE20K, and Cityscapes.

Does Mask2Former achieve state-of-the-art accuracy?

Yes, across multiple segmentation benchmarks.

How does Mask2Former handle overlapping objects?

By using distinct queries for each instance.

Is Mask2Former more efficient than traditional CNN-based methods?

Yes, in accuracy, but may require higher computation.

Is Mask2Former available in PyTorch or TensorFlow?

Yes, mainly in PyTorch implementations.

Can developers fine-tune Mask2Former for custom datasets?

Yes, it supports transfer learning.

What challenges does Mask2Former still face?

High computational cost and memory usage.

How might Mask2Former evolve with future AI models?

Likely to become faster and more efficient.

Is Mask2Former suitable for real-time segmentation tasks?

Not yet ideal, but ongoing research is improving speed.